This blog post marks the end of a long hiatus in my blog writing. I’m starting back with a post about language assessment in general & placement tests in particular, in which I compare & contrast two English as a Foreign or Second Language (EFL/ESL) placement assessment methods.

Context

I recently had the opportunity to compare & contrast two EFL/ESL assessment methods: semi-structured interviews & discrete-point multiple choice question (MCQ) tests. Both tests were administered to 475 international students who attended an EFL/ESL summer school in Canada. A sample size of n=475 gives reasonably strong statistical power, & the comparison took place in a typical summer school context.

From many years of experience of English language assessment in general & placement testing in particular, I believed that the semi-structured interview would provide the more appropriate score for assigning students to cohorts of similar English language proficiency at the summer school. Semi-structured interviews place the main emphasis on communicative competencies, which are the ultimate goal of language learning. In other words, they’re a more direct form of assessment than typical structurally focused tests. Consequently, students were placed in their classes based entirely on their performance in the semi-structured interviews. Only minor adjustments for incorrect placement had to be made, mostly because of inter-rater reliability errors & because some students had under-performed due to jet-lag &/or test anxiety.

It is preferable to assess communicative competence at international summer schools because they bring together young students from around the world into mixed first language & nationality classes, making English the lingua franca that everyone has in common. In other words, English is the only language in which they can all understand each other & make themselves understood. Summer schools are an ideal opportunity to put into practice the English language that students have been studying year-round & further develop their communication skills. To accommodate this opportunity, English language summer schools often promote learning & teaching methods, such as communicative language teaching (CLT), task-based language learning (TBLL), content & language integrated learning (CLIL), & project-based learning (PBL), which place a strong emphasis on student-to-student cooperation & communicative competence.

Comparison of the Assessment Methods

Firstly, the semi-structured interviews & the discrete-point MCQ test assess different aspects of linguistic knowledge & ability (Table 1, below).

- Semi-structured interviews assess students’ overall production of coordinated, integrated language skills & communicative competence, i.e. their ability to understand & make themselves understood in English & their ability to maintain a conversation through taking turns & giving appropriate responses.

- Discrete-point MCQ tests primarily assess students’ ability to recognise correctly & incorrectly formed language structures, select appropriate vocabulary, & sometimes choose appropriate responses to given cues, prompts, &/or questions.

Table 1: A comparison of aspects of language assessed by discrete-point MCQs & semi-structured interviews

| Discrete-point MCQs | Semi-structured interviews |
| --- | --- |
| Test language recognition | Test language production |
| Closed, tightly controlled | Open-ended, spontaneous |
| Pre-defined | Adaptive |
| Isolated knowledge & skills | Coordinated, integrated knowledge & skills |
| Often focus on structural aspects (Usage) | Focus on communicative competence (Use) |

Details of the Two Tests

Discrete-Point MCQ Test

The grammar test was a typical structural, norm-referenced, progressive discrete-point MCQ test, consisting of 50 items, of the type & quality frequently used in summer schools & language academies.

It’s worth noting that the design & development of effective MCQ language tests is challenging & requires a high degree of expertise. They require highly skilled, careful, well-informed item writing & frequent statistical analyses of students’ responses to test items, e.g. facility index, discrimination index, & distractor efficiency. In this sense, effective MCQ tests are developed through an iterative process rather than simply designed.
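To make two of those statistics concrete, here is a minimal sketch of how a facility index & an upper-lower discrimination index could be calculated for a single item. The function names, the 27% group fraction, & the response data are my own assumptions for illustration; they are not taken from the test described in this post, & distractor efficiency is not covered.

```python
def facility_index(item_responses):
    """Proportion of test takers who answered the item correctly (0.0 to 1.0)."""
    return sum(item_responses) / len(item_responses)


def discrimination_index(item_responses, total_scores, fraction=0.27):
    """Upper-lower discrimination index for one item (-1.0 to 1.0).

    Ranks test takers by total test score, takes the top & bottom
    `fraction` of them, & returns the difference between the two groups
    in the proportion answering this item correctly.
    """
    ranked = sorted(zip(total_scores, item_responses), key=lambda pair: pair[0])
    group_size = max(1, round(len(ranked) * fraction))
    lower = [correct for _, correct in ranked[:group_size]]
    upper = [correct for _, correct in ranked[-group_size:]]
    return sum(upper) / group_size - sum(lower) / group_size


# Made-up data for illustration only: 1 = correct, 0 = incorrect.
item = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
totals = [48, 45, 41, 39, 35, 30, 26, 22, 18, 12]

print(facility_index(item))
print(discrimination_index(item, totals))
```

An item that nearly everyone gets right (or wrong), or one that strong & weak students answer equally well, tells you little about proficiency, which is why these figures are recalculated after each administration.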

In contrast, MCQ tests are relatively straightforward to administer & easy to grade, e.g. untrained staff members can grade the tests with an answer key, or the tests can even be administered & graded by computer.

So MCQ tests require high expertise, time, & effort to develop but low expertise to administer & grade.

The Semi-Structured Interview

The speaking test was a semi-structured interview based on the communicative competencies & language functions outlined in the Common European Framework of Reference for Languages (CEFR). The range of the speaking test was subdivided into 12 levels of language functions & communicative competencies, aligned with Trinity College London’s Graded Examinations in Spoken English (GESE), as indicated in Table 2 (below). This is more fine-grained than typical placement tests.

Table 2: Correspondence between CEFR & speaking test levels.

| CEFR | Level |
| --- | --- |
| A1.1 | 1 |
| A1.2 | 2 |
| A2.1 | 3 |
| A2.2 | 4 |
| B1.1 | 5 |
| B1.2 | 6 |
| B2.1 | 7 |
| B2.2 | 8 |
| B2.3 | 9 |
| C1.1 | 10 |
| C1.2 | 11 |
| C2 | 12 |

In contrast to the discrete-point MCQ test, the semi-structured interviews required qualified, experienced teachers to administer each interview, at an average of 5 minutes per interview. Testers were provided with a criterion-referenced rubric & some additional visual materials to act as stimuli in order to elicit language, particularly from lower-proficiency students.

The exact duration of each interview depends mostly on the student’s language proficiency. The lower the proficiency of the student, the less time the interview takes & conversely, the higher the level, the longer the interview. For example, a cohort of 150 students would take 10 teachers about 75 minutes to interview (150 * 5 / 10 = 75) but if the levels are skewed towards the lower end, which is often the case at EFL/ESL summer schools, they would take substantially less.
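The calculation in parentheses can be written as a small helper for planning other cohort sizes. This is only a rough sketch: the function name & parameters are hypothetical, & it assumes that testers interview in parallel, one student at a time, at a fixed average length with no changeover time.

```python
import math


def estimated_session_minutes(num_students, num_testers, avg_minutes_per_interview=5):
    """Estimated wall-clock minutes to interview a whole cohort."""
    if num_testers <= 0:
        raise ValueError("num_testers must be positive")
    # Each tester works through their share of the cohort one student at a time;
    # round up because the busiest tester determines when the session ends.
    interviews_per_tester = math.ceil(num_students / num_testers)
    return interviews_per_tester * avg_minutes_per_interview


print(estimated_session_minutes(150, 10))  # 75
```

Lowering `avg_minutes_per_interview` is a simple way to model a cohort skewed towards the lower levels.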

Administering the speaking test is therefore more time-consuming & requires a larger number of more highly-qualified & experienced testers. However, the design is relatively quick as it relies to a high degree on the testers’ expertise in order to judge students’ language proficiency.

Comparison of the Test Results

As mentioned earlier, the semi-structured interviews were a valid & reliable predictor of students’ ability to participate in classes.

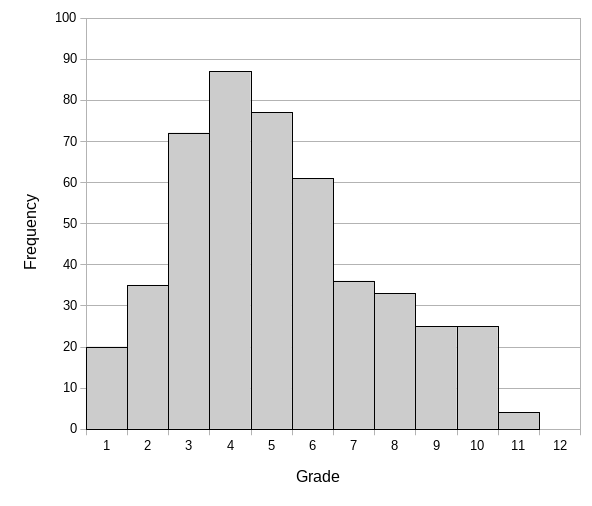

A frequency analysis (Figure 1, below) revealed that the distribution of grades was indeed skewed towards the lower end of the English proficiency scale, centred at around level 4 (CEFR A2.2).

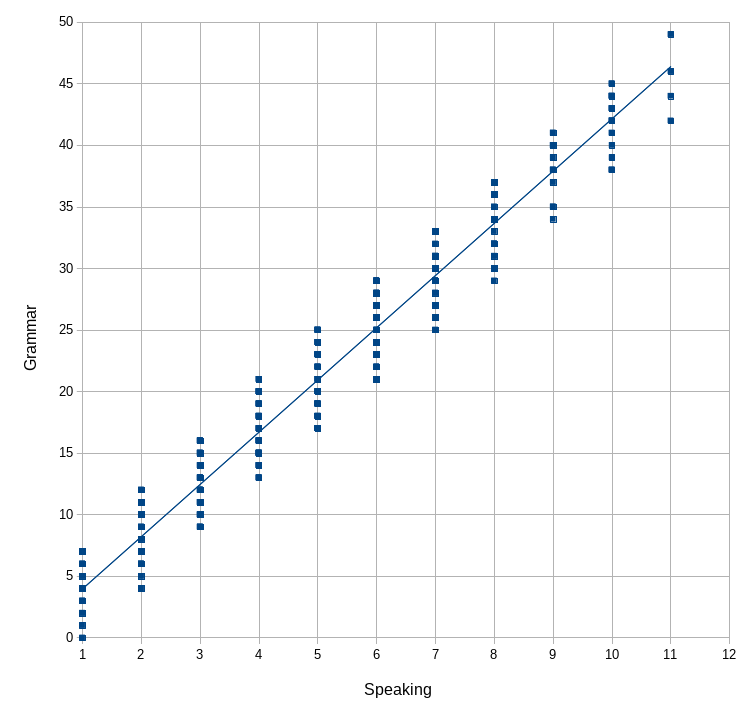

A scatter-plot of the test results is a quick & easy-to-interpret way to compare each student’s grammar test score with their speaking test score. Each dot represents one or more students, with their speaking test score on the x-axis (horizontal) & their grammar test score on the y-axis (vertical). If there were a strong correlation between the speaking & grammar tests, we would expect to see a relatively close grouping along a diagonal line from bottom left to top right (Figure 2, below).
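Alongside the scatter-plot, the strength of the relationship can be summarised as a single number. Because the speaking levels are ordinal, a rank correlation such as Spearman’s rho is one reasonable choice. What follows is a minimal standard-library sketch; the function names & the scores are invented for illustration & are not the summer school data.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        tied_rank = (start + end) / 2 + 1
        for position in range(start, end + 1):
            ranks[order[position]] = tied_rank
        start = end + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Invented scores for illustration only.
speaking_levels = [2, 3, 4, 4, 5, 6, 8, 11]
grammar_scores = [20, 35, 48, 30, 28, 49, 40, 50]
print(spearman(speaking_levels, grammar_scores))
```

A value close to 1 would correspond to the tight diagonal grouping described above; values nearer 0 correspond to a widely scattered cloud of dots.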

But what we see from the analysis of the actual test results is a wide variation between the semi-structured interviews & the discrete-point MCQ test (Figure 3, below).

For example, the students who scored 48-50 in the discrete-point MCQ test scored across a range of levels 4-11 (CEFR A2.2-C1.2) in the semi-structured interviews, a spread of 7 levels among students with near-identical MCQ scores. Using the discrete-point MCQ test results to place students in classes would result in students at levels 4 & 11 being placed in the same class. In other words, the MCQ test was a poor predictor of students’ communicative ability or their ability to participate in the appropriate level of class at the summer school.
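The same check can be run for every MCQ score band rather than just the top one. The sketch below groups students into bands of MCQ scores & reports the lowest & highest speaking level found in each band. The function name, the band width, & the sample results are assumptions for illustration, not the actual data.

```python
from collections import defaultdict


def level_spread_by_band(results, band_width=3):
    """Map each MCQ score band to the (min, max) speaking level within it.

    `results` is an iterable of (mcq_score, speaking_level) pairs. Bands are
    labelled by their lowest score, e.g. 48 covers scores 48-50 when
    band_width is 3.
    """
    levels_by_band = defaultdict(list)
    for mcq_score, speaking_level in results:
        band_start = (mcq_score // band_width) * band_width
        levels_by_band[band_start].append(speaking_level)
    return {
        band: (min(levels), max(levels))
        for band, levels in sorted(levels_by_band.items())
    }


# Invented results for illustration only: (MCQ score, speaking level).
sample = [(48, 4), (49, 7), (50, 11), (30, 3), (31, 4), (32, 3)]
print(level_spread_by_band(sample))  # {30: (3, 4), 48: (4, 11)}
```

A band whose minimum & maximum speaking levels are far apart is one where the MCQ score alone would put very different speakers in the same class.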

This analysis of the test results clearly illustrates how pronounced the difference typically is between assessing grammatical competence & assessing communicative competence.

Where Does This Leave Discrete-Point MCQ Tests?

This is not entirely a critique of the discrete-point MCQ test format per se. These tests can be designed better & to assess a wider range of linguistic knowledge & skills. The problem in this case, which is not atypical, is that the items within the test focused almost entirely on structural aspects of language, i.e. grammatical competence, with too little attention given to how language should be used from a functional perspective, i.e. communicative competence.

As mentioned above, effective MCQ tests require highly skilled & experienced item writers & are resource-intensive & time-consuming to develop. Additionally, it’s even more challenging to develop MCQ items which assess many of the constituent knowledge & skills that make up communicative competencies. The question remains as to whether developing a discrete-point MCQ test that’s appropriate for EFL/ESL summer schools is a feasible goal. The first step is understanding where the challenges lie & developing item-writing strategies that meet them.